Introduction

This project was developed as part of a competition hosted by Steve Rabin, author of the Game AI Pro series and organizer of the GDC AI Summit. The goal was to develop the most optimal and performant A* algorithm. The demo features an AI agent navigating an environment while avoiding obstacles and following the optimal path. To enhance performance, I implemented various optimizations that served to improve memory usage and algorithmic efficiency. Additionally, I applied techniques like rubberbanding and path smoothing to create smoother, more natural movement. My implementation ranked among the fastest in the competition, earning me a tour of Nintendo's Redmond headquarters with Steve Rabin.

Optimizaion Techniques

Optimizing the A* algorithm primarily revolves around improving data read and write speeds. To maximize performance, I focused on both memory efficiency and algorithmic optimization, reducing cache misses and improving lookup speeds. I implemented a heap-based priority queue and replaced boolean arrays with bit fields to minimize memory usage and enhance data access efficiency.

Bytes and Bit Fields

Since not every tile on a terrain is valid walking space, each node must track its eight neighboring nodes to determine which are accessible during pathfinding. A straightforward approach might involve using an array of booleans to store whether each neighboring node is valid. However, there is a more efficient way to achieve the same result.

Because each node is either valid or invalid and has only eight neighbors, we can store this information in a single byte instead of using an array of booleans. This approach offers several advantages. First, by using a single byte rather than an eight-byte boolean array, memory usage is reduced by 87.5%. Additionally, since a byte consolidates multiple flags into a single unit, it improves cache locality, reducing cache misses and increasing processing speed.

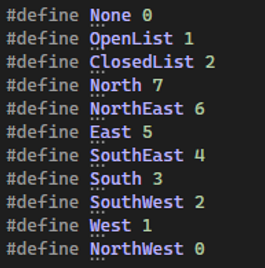

In the A* algorithm, open and closed lists track which nodes are available for exploration and which have already been processed. To improve efficiency, I use a bit field to store a node’s list status. A bit field is a class data member with an explicitly defined size. In my implementation, each node has a two-bit integer field, allowing it to store values from 0 to 2—where 0 means the node is on no list, 1 indicates it's on the open list, and 2 signifies it's on the closed list. While I still maintain a separate open list for storing and updating nodes, using a bit field allows for quick list status checks without searching through the open list, improving performance.

Optimizing the Open List

One key advantage of the A* algorithm is its ability to replicate realistic navigation by always prioritizing movement toward the desired goal. This behavior is driven by the open list, which keeps track of all nodes that can be explored from the agent's current position. These nodes are ordered based on their proximity to the goal, ensuring an efficient and directed search.

However, a challenge arises when the searchable area becomes too large, causing the open list to grow significantly. Since the open list must be reordered every time a new node is added, maintaining its efficiency is crucial for the algorithms performance. To address this, I needed a way to optimize both insertion and retrieval times. Implementing a priority queue proved to be the ideal solution, as it allowed for efficient sorting and ensured the algorithm ran smoothly.

Node Comparison

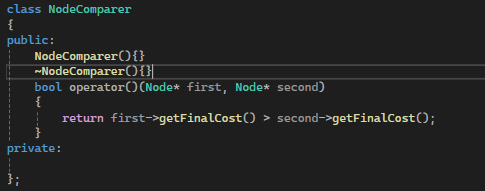

Before I could begin implementing the priority queue for the open list I fist needed to establish a function that would allow me to compare two nodes so that the priority queue would know how to sort the nodes on the list.

Implementing the Priority Queue

For the purposes of the A* algorithm the priority queue needed to be able to do 4 things

-

retrieve the most node with the most proximity to the goal

-

Insert a new node into the queue and reorder the list then after

-

Update a node that is already in the queue and then order the list

-

Determine is the are any nodes on the list

Although C++ does have a priority queue container, I was unable to make use of it for this project since it does not feature a way to update a node already on the list. A work around for this could have been achieved by finding the desired node, updating it, and then removing and reinserting all nodes on the list but this would have been very inefficient. Fortunately I was able to easily create a custom open list using STL that could efficiently preform all the necessary tasks.

The container that actually holds all of the node pointers is simply a vector, but the STL functions preformed on it result in it functioning as a priority queue that is organized as a heap.

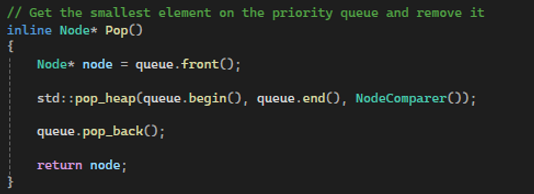

The Pop function works by first getting the element at the front of the queue (the element with the closes proximity to the goal). Then it calls pop_heap to move that first element to the back of the queue and ensure that the rest of the elements are still properly ordered. And lastly it called pop_back to actually remove the first element with the closes proximity to the goal (remember that pop_heap moved this element to the back of the list).

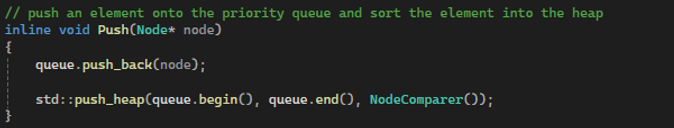

The Push function works by simply pushing a node onto the back of the queue and then calls push_heap to sort the new node onto the heap.

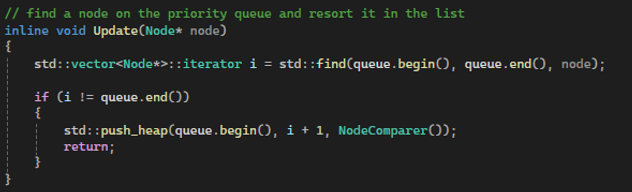

The Update function is the most complex of the 4 functions. It works by using the std::find function to find the desired node in the queue. Then if the node was found it uses push_heap to resort the queue from its position in the heap. Note that we aren't updating the nodes value in this function, but are just updating its position in the queue. This is because this function is only ever called after we have already updated a nodes value during the search loop.

The isEmpty function is simply a wrapper that returns if the queue is empty or not